Compilers can suddenly and silently introduce implementation vulnerabilities in yesterday’s secure code. PQShield recently discovered an instance of this problem in the popular ML-KEM (Kyber) reference implementation, and it has been resolved with the help of Peter Schwabe and the Kyber team. In this article, we investigate the issue in detail, and take a deep-dive into its root cause, as well as explaining how the problem was resolved.

It’s about time

Even the most secure cryptographic algorithm can be rendered ineffective if its implementation contains vulnerabilities. A key part of implementation security is resistance against side-channel attacks, which exploit the physical side-effects of cryptographic computations to infer sensitive information.

To remain secure against side-channel attacks, cryptographic algorithms must be implemented such that no attacker-observable effect of their execution depends on the secrets they process. In this blog post, we’re concerned with a particular side channel that’s observable in almost all cryptographic deployment scenarios: time.

Constant time cryptography

Let’s assume we want to deploy a cryptographic algorithm across a wide range of platforms. How would a skilled cryptography engineer write portable code that doesn’t leak its secrets through timing?

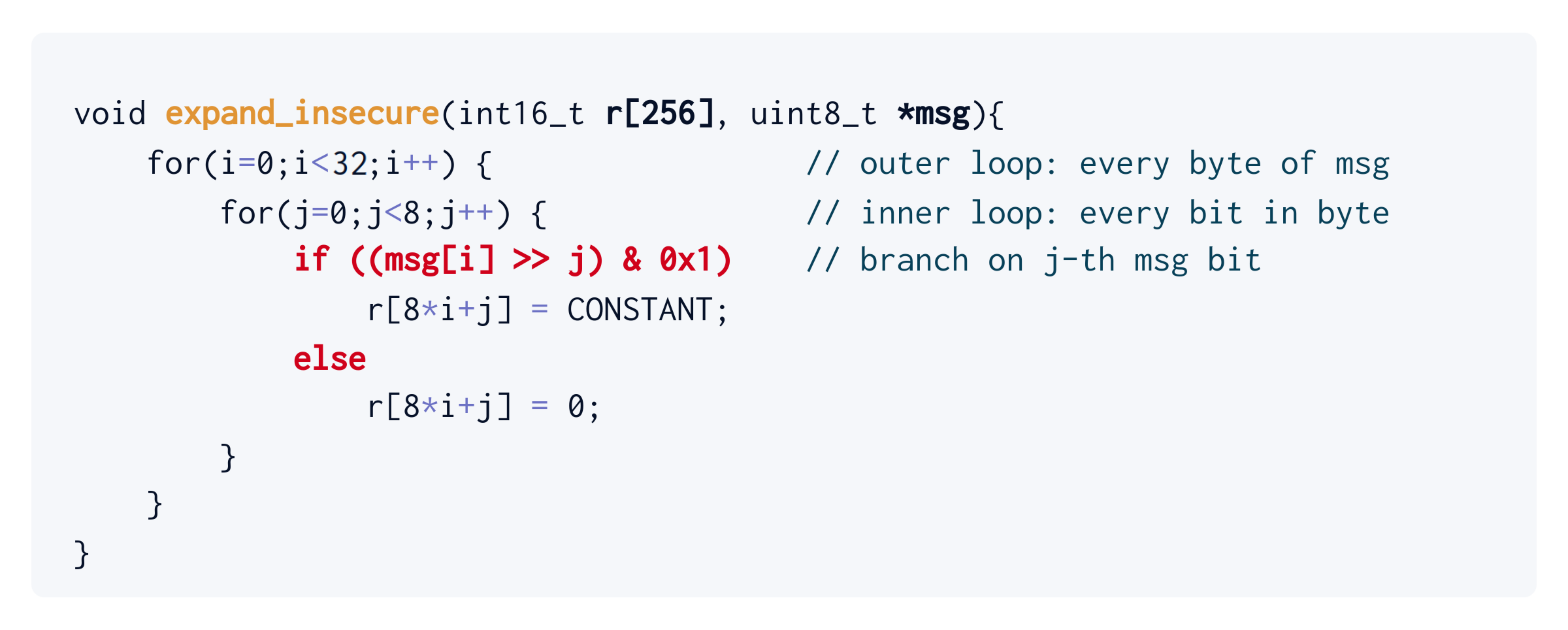

Consider a toy example. Let’s say you have to write a function that expands a 256-bit string msg into an array r of 256 integers of 16 bits. In particular, r[j] needs to be populated with some constant if the j-th bit of msg is set, and zero otherwise.

A novice cryptography engineer might come up with a C routine that’s something like this:

It’s good, but (regarding our earlier side-channel discussion) there is an issue with this piece of code. Its control flow (i.e, the sequence of operations it performs) depends on the bits of msg. The part of the code where the control flow diverges is marked in red.

Does that matter? Well, that depends on how this particular function is used: it does matter if msg is supposed to be secret, since it is effectively getting encoded, bit-by-bit, in the control flow of the program.

Let’s write a secure version of this function.

To take the secret bits into account without branching on them, let’s use a common trick in cryptography engineering: bit masks. We can negate the message bit to produce a mask that is either all-zeros (bit j is zero) or all-ones (bit j is one). We can then use the mask to either zero out the constant (bit j is zero) or load it as-is (bit j is one). Here is the complete function:

Notice that the control flow of expand_secure is independent of the bits of msg. The assignment to r happens regardless of the message bit, and the mask ensures the correct data flow. So, all is well! Right?

Compiler woes

When a developer writes code in a portable high-level language like C, C++, or Rust, the source code is not executed directly on the machine. Instead, it is first compiled into assembly or machine code by a compiler, such as GCC or Clang for C/C++, so that it can run natively on the specific target machine.

Compilers not only perform this transformation to machine code, they also have several optimization passes. Well-known examples are loop unrolling and the removal of code that does not affect the program’s output. But there are many, many more. Modern compilers have become very proficient at performing optimizations to generate fast machine code from portable high-level source code.

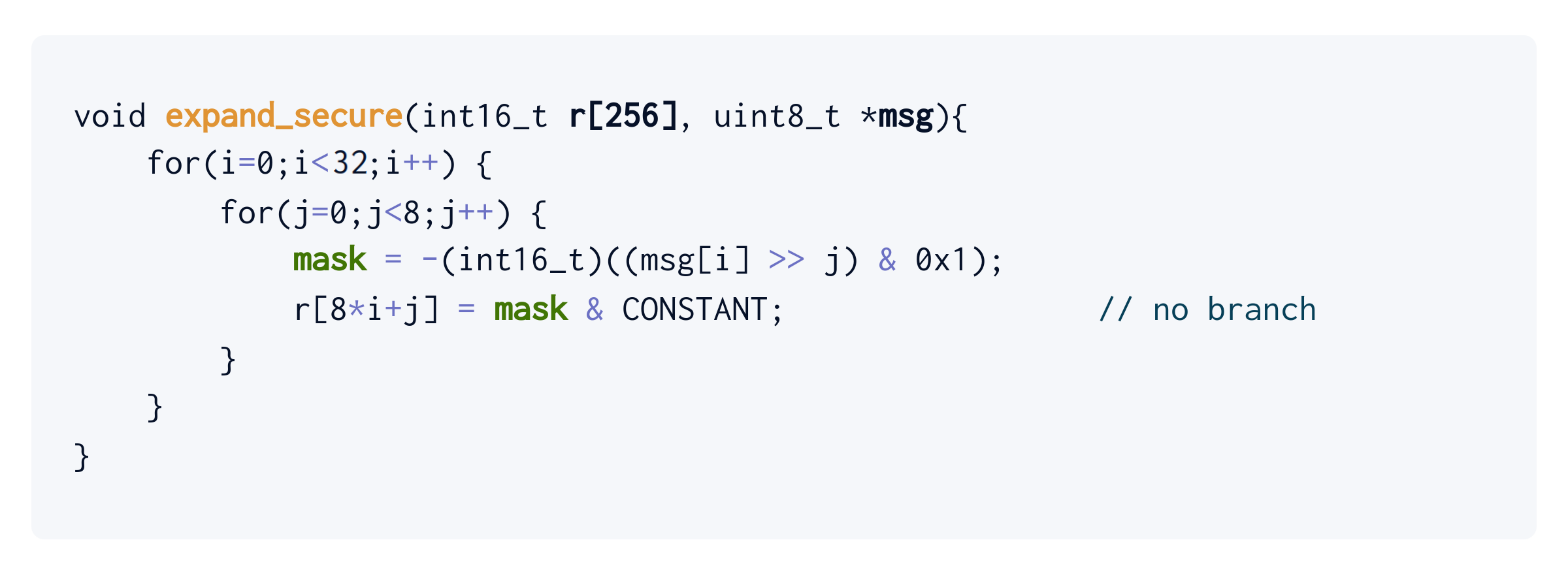

Let’s take a look at a state-of-the-art mainstream compiler in action. Returning to our toy example, let’s compile the insecure expand_insecure for x86 using the most recent version of Clang (v18), optimizing for code size (-Os):

The vulnerability we identified before obviously hasn’t magically disappeared. It can be observed in the inner loop, and is marked in red. The move of 1665 (our choice of constant) into edx is either skipped or not skipped, depending on the relevant bit of msg.

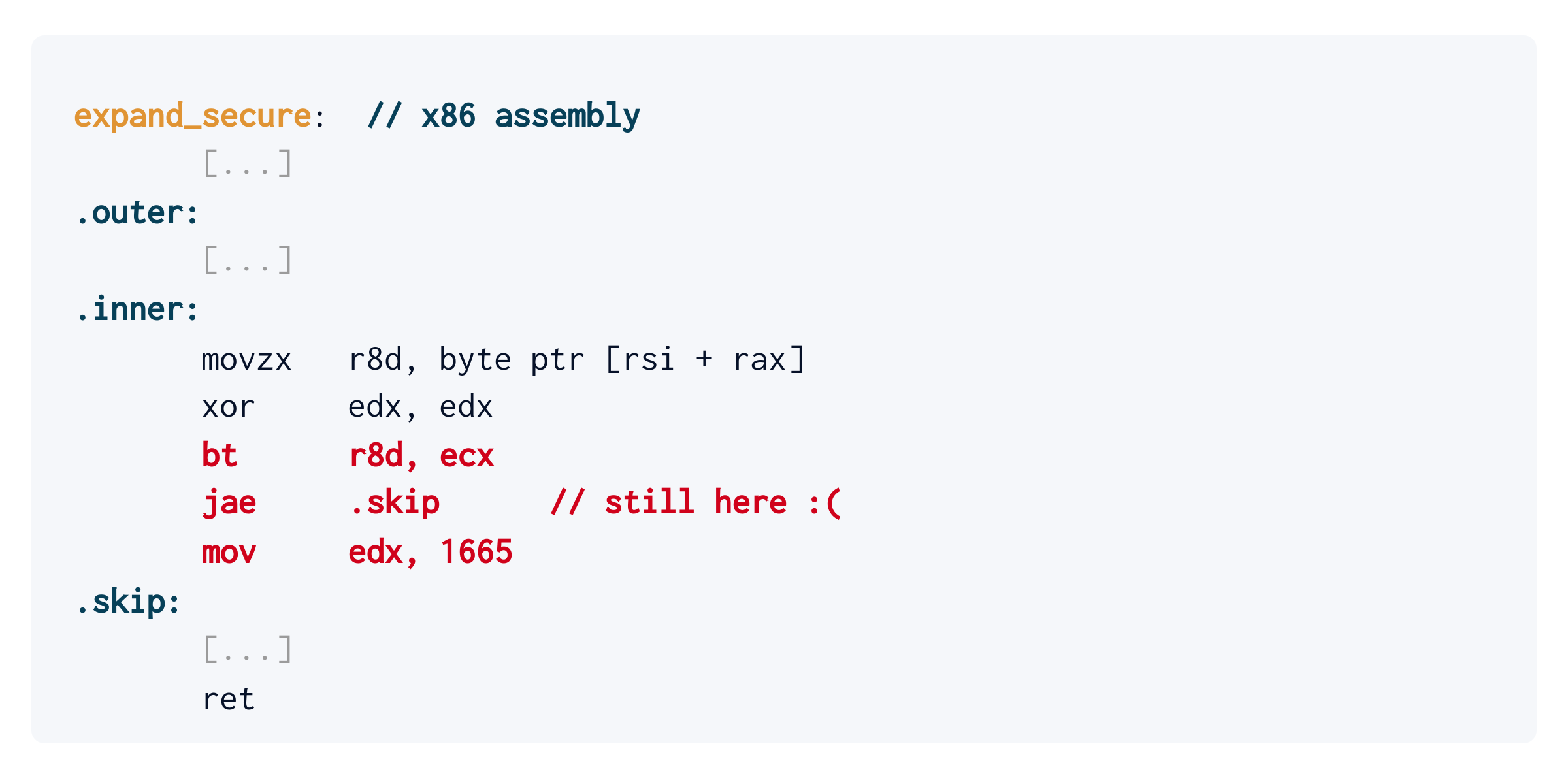

Now let’s compile the secure expand_secure implementation and see how it differs – showing only the inner loop for brevity:

… there must have been a mix-up! Really? Isn’t it the same assembly? What happened?

It appears that Clang eagerly:

- noticed that the mask is either all-zero or all-one,

- inferred that the masked load is therefore either zero or CONSTANT,

- emitted a branch, because it is heuristically the fastest on x86

So, we can see here that the compiler can silently undo measures taken by the skilled implementer. This behaviour is not limited to the configuration above (clang -Os). If you play around with compilers and options, you’ll notice this compiler-induced security regression in expand_secure for at least:

- Versions: x86 Clang 15, 16, 17 and 18

- Options: -Os, -O1, -O2 -fno-vectorize and -O3 -fno-vectorize

In the past, some other secure source patterns have also been shown to be compiled into vulnerable machine code.

What about ML-KEM?

ML-KEM (Kyber) is the soon-to-be standard for post-quantum key encapsulation (FIPS-203). It turns out that the routine we described earlier isn’t just a toy example; it’s actually an important routine in ML-KEM, and it’s needed in both key encapsulation and decapsulation. In the reference implementation, this function is called poly_frommsg. You can inspect it here. Looks good?

It does! However, having loaded ‘Chekhov’s gun’ with the previous section, it’s time to fire it.

The code pattern corresponds to the expand_secure implementation. For the compiler options we mentioned, Clang emits a vulnerable secret-dependent branch in this part of the ML-KEM reference code.

So just how bad is this?

- On the one hand, the msg argument is a critical security parameter in both encapsulation and decapsulation.

- On the other hand, there’s one vulnerable branch. In decapsulation, poly_frommsg is used once. The whole decapsulation takes more than 100K cycles. Surely the timing difference produced by this one branch is too small to matter?

Well, there are two answers. The first is that research shows that sophisticated local attackers can perform high-resolution cache attacks, target the branch predictor to learn which branches are taken, or slow down the library to amplify the timing difference. So the prudent approach is to patch.

The second answer is that, actually, measuring the time it takes for a complete decapsulation is enough for an attacker to piece together the key.

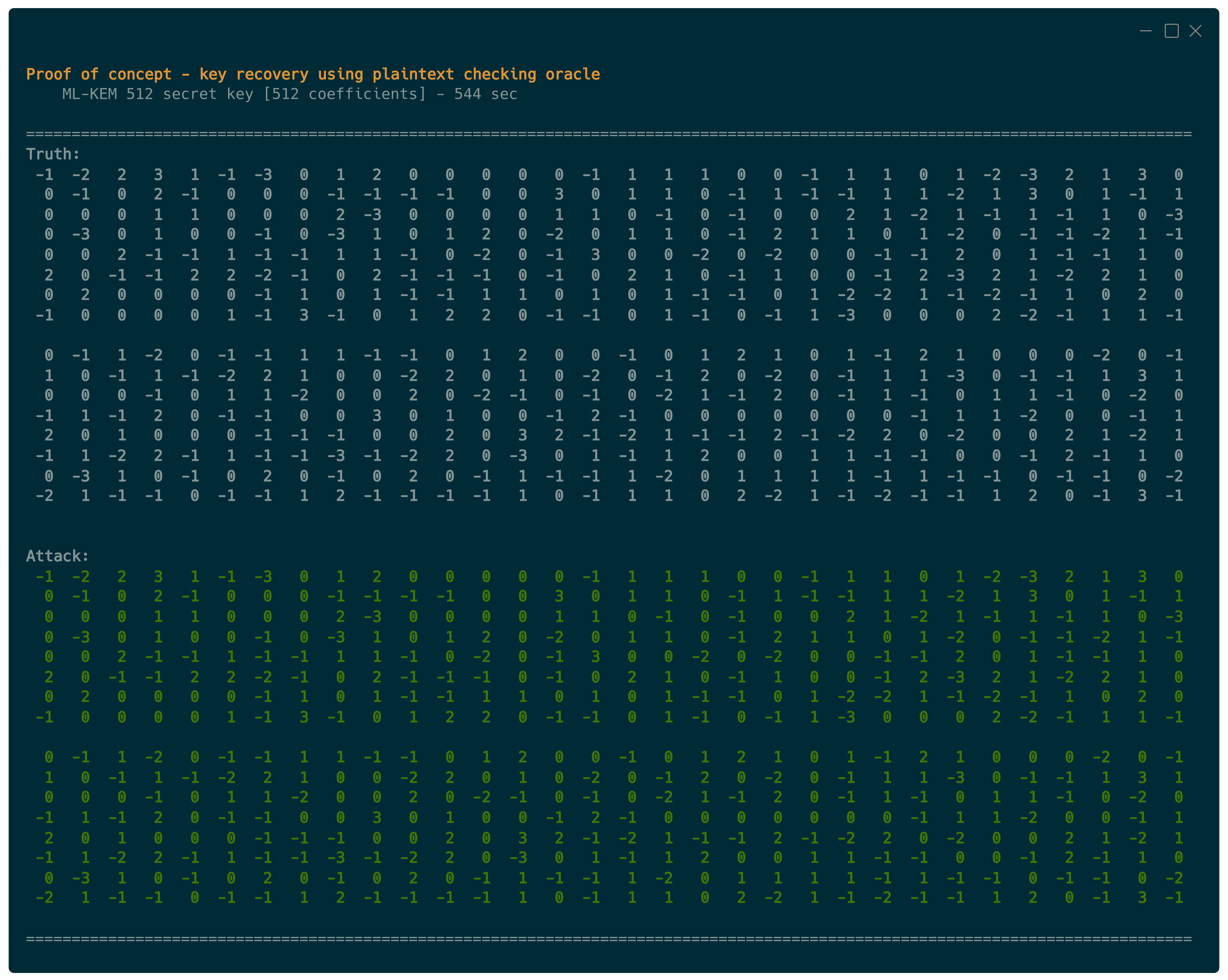

To back up this claim, we provide a practical demo that shows the role of the timing vulnerability in the recovery of an ML-KEM 512 secret key. The demo terminates successfully in less than 10 minutes on the author’s laptop.

Affected Libraries

In summary: while not all compilers, options and platforms are affected, if a given binary is affected, the security impact may be critical. Therefore, the conservative approach is to take this issue seriously, and look out for patches from your cryptography provider.

The reference implementation has been patched by implementing the relevant conditional move as a function in a separate file. This change prevents Clang from recognizing the binary nature of the condition flag, and hence from applying the optimization.

We’ve been coordinating with libraries that integrate the reference implementation. We notified liboqs, aws-lc, pq-code-package and WolfSSL in advance through their private vulnerability disclosure process. We also informed PQClean and rustpq/pqcrypto, together with a public disclosure of the issue.

It’s important to note that this does not rule out the possibility that other libraries, which are based on the reference implementation but do not use the poly_frommsg function verbatim, may be vulnerable – either now or in the future.

We’d like to thank Peter Schwabe (and the Kyber team) for their role in the swift and collaborative disclosure process, helping us coordinate with the wider PQC ecosystem.

Implementation Security Matters

PQShield cares about advancing PQC implementation maturity inside and outside of our products. Timing vulnerability testing is included in all three PQShield security profiles – Cloud, Edge, and Government – and features several complementary post-compilation constant-time checks.

Our own quantum-safe software products (PQCryptoLib, PQCryptoLib Embedded, and PQSDK) were never affected by this issue. As providers of post-quantum solutions, our goal is to stay one step ahead of the attackers, and we hope that with our focus on research, development, and implementation, we can continue to contribute solutions to potential vulnerabilities.